It was really nice to see the Stacks 3.0 upgrade go live earlier today!

I wanted to open up a discussion about congestion in Stacks: how tenures factor into it, and how the Nakamoto upgrade (Stacks 3.0) and the fast blocks advertised with it have shaped user expectations.

Let’s dive into a few key points. I believe the core teams have already given this a lot of thought and that many optimizations are already planned. I would love to see more specific information shared with the public, and I am hoping this thread can help kick that off.

Current Block Dimensions and User Expectations

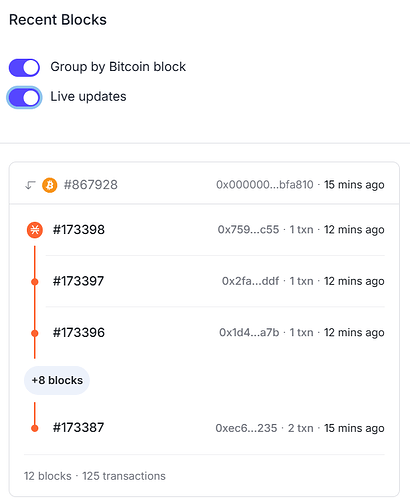

As some of you have noticed, congestion can occur during periods of high demand, and transactions with low fees can remain pending for a long time. We’ve confirmed that blocks can fill up quickly within a tenure, which then requires us to wait for the next Bitcoin block. Many users expected that with faster block arrivals in Stacks 3.0 (every 5-10 seconds), each of these blocks would have the same maximum size as a block in Stacks 2.1. Instead, the limits apply to the tenure as a whole, and the tenure dimensions remain similar to the block dimensions of previous versions despite the faster arrivals. A tenure typically changes with each new Bitcoin block.

This consistency in block dimensions is intentional. Although block arrival times have changed, we’re still working within a space where we must balance blockchain size growth with ensuring enough room for protocols and high-priority transactions.

Block Dimension Flexibility and Finding the Sweet Spot

The block dimensions in Stacks are somewhat flexible, and the design philosophy has always been to find a “sweet spot”—a balance between reasonable size growth and sufficient transaction capacity. Right now, the dimensions allow protocols to operate without overloading the blockchain’s size. Here’s a breakdown of block dimensions from Stacks 2.1, which serve as the basis for Stacks 3.0:

| | Block Limit | Read-Only Limit |
|---|---|---|
| Runtime | 5000000000 | 1000000000 |
| Read count | 15000 | 30 |
| Read length (bytes) | 100000000 | 100000 |
| Write count | 15000 | 0 |
| Write length (bytes) | 15000000 | 0 |

If a block hits the limit on any one of these dimensions, it’s considered full. That means in times of high demand, only smaller transactions (such as STX transfers) may fit into the remaining space for a tenure.
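To make the "full on any one dimension" rule concrete, here is a minimal Python sketch. It is not how stacks-core implements cost tracking; the `Cost` class and `can_include` helper are hypothetical names, and the per-transaction costs are made-up illustrative numbers rather than measured values. Only the `BLOCK_LIMIT` figures come from the table above.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class Cost:
    """Cost in the five dimensions from the table (hypothetical helper type)."""
    runtime: int = 0
    read_count: int = 0
    read_length: int = 0
    write_count: int = 0
    write_length: int = 0

    def __add__(self, other):
        # Add the two costs dimension by dimension.
        return Cost(*(getattr(self, f.name) + getattr(other, f.name)
                      for f in fields(self)))

    def fits_within(self, limit):
        # Every dimension must stay at or under its limit.
        return all(getattr(self, f.name) <= getattr(limit, f.name)
                   for f in fields(self))


# "Block Limit" column from the table above.
BLOCK_LIMIT = Cost(
    runtime=5_000_000_000,
    read_count=15_000,
    read_length=100_000_000,
    write_count=15_000,
    write_length=15_000_000,
)


def can_include(used, tx, limit=BLOCK_LIMIT):
    """True if adding tx keeps all five dimensions within the limit."""
    return (used + tx).fits_within(limit)


# Illustrative numbers only: a budget that is nearly exhausted on read count
# while the other four dimensions still have plenty of room.
used = Cost(runtime=1_000_000_000, read_count=14_990, read_length=1_000_000,
            write_count=500, write_length=100_000)
contract_call = Cost(runtime=5_000_000, read_count=25, read_length=20_000,
                     write_count=5, write_length=500)
small_transfer = Cost(runtime=10_000, read_count=4, read_length=200,
                      write_count=2, write_length=50)

print(can_include(used, contract_call))   # False: read count would be 15015
print(can_include(used, small_transfer))  # True: all dimensions still fit
```

In this example the budget is only 20% used on runtime, yet the contract call is rejected because read count alone would go over. That is the situation in which only small transactions can still be squeezed in.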

Room for Expansion and Technical Limits

This brings up a crucial question: how much room do we have to increase block size if congestion worsens, and do we want to?

Technically, the block dimensions are changeable. However, altering these dimensions would need careful consideration to keep Stacks accessible and manageable for those running nodes or miners. There’s also the question of whether changing block dimensions would be a consensus-breaking change—this is something to consider if adjustments are proposed.

When I spoke with Jesse recently, he estimated that transaction volume would need to be around 10 times its current level before the existing dimensions would become a bottleneck. That said, a well-functioning blockchain does need a functioning fee market, and block space will always be limited by design. The idea is to allow high-priority transactions to find room, creating a natural market in times of high demand.

It seems miners are not yet rationing block space to optimize their profits, as suggested in this issue: "test: testnet with heavy mempool activity" (Issue #4538, stacks-network/stacks-core on GitHub).

Next Steps: Tenure Extensions and Block Size Considerations

A planned, non-consensus-breaking change is extending tenure duration when Bitcoin blocks are delayed by more than 10 minutes. This change, which has already been agreed upon to follow the Nakamoto Upgrade, would potentially offer more space when it’s needed most. This kind of “reset” to block space could address some of the waiting issues without increasing block dimensions directly, and I’d like to highlight the importance of prioritizing this now.
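As a rough illustration of the "reset" idea, the sketch below models a tenure budget that is refreshed either by a new Bitcoin block or by an extension once the Bitcoin block is more than 10 minutes late. This is an assumption-laden toy model, not the planned implementation: `TenureState`, `on_tick`, and the single `budget_used` fraction are hypothetical, and the real trigger conditions may differ from a simple timer.

```python
from dataclasses import dataclass

# The 10-minute delay mentioned above, in seconds.
EXTEND_AFTER_SECS = 10 * 60


@dataclass
class TenureState:
    """Toy model of a tenure's budget (hypothetical, for illustration only)."""
    last_reset_at: int   # unix seconds when the budget last started fresh
    budget_used: float   # 0.0 to 1.0, share of the tightest dimension consumed
    extensions: int = 0  # how many times this tenure has been extended


def on_tick(state, now, new_bitcoin_block):
    """Return the tenure state after observing the clock and the chain tip."""
    if new_bitcoin_block:
        # Normal case: a new Bitcoin block starts a new tenure, fresh budget.
        return TenureState(last_reset_at=now, budget_used=0.0)
    if now - state.last_reset_at > EXTEND_AFTER_SECS:
        # The Bitcoin block is late: extend the tenure and refresh the budget.
        return TenureState(last_reset_at=now, budget_used=0.0,
                           extensions=state.extensions + 1)
    # Otherwise nothing changes; a full budget stays full.
    return state


# A tenure whose budget filled up right away, with no Bitcoin block arriving.
state = TenureState(last_reset_at=0, budget_used=1.0)
state = on_tick(state, now=300, new_bitcoin_block=False)   # still full
state = on_tick(state, now=601, new_bitcoin_block=False)   # extended, budget reset
```

Without the extension branch, the full budget in this example would block all further transactions until the next Bitcoin block arrives, however long that takes.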

Let’s open up the discussion:

1. What else here is already part of the planned optimization roadmap?

2. What flexibility is there when it comes to increasing the block dimensions or tenure dimensions?

3. What technical or economic factors should we consider to maintain a healthy fee market?

4. How do we balance enough block space for protocols building on Stacks with keeping the blockchain accessible?

   - Should protocol teams’ (e.g. ALEX) expectations of user growth and transactions per user factor in somehow if block dimensions are changed?

5. What are the alternatives for scaling without increasing block dimensions?

   - Alternatively, should we encourage the use of subnets (Bitcoin L3) rather than changing block dimensions on Stacks (Bitcoin L2)?

   - Clarity WASM upgrade: how will it influence existing protocols? Does it require new contract deployments, or will existing protocols benefit from it automatically?

   - What can protocols do to decrease their need for “block space”?